Stanford scientists develop brain implant capable of 'decoding inner speech'

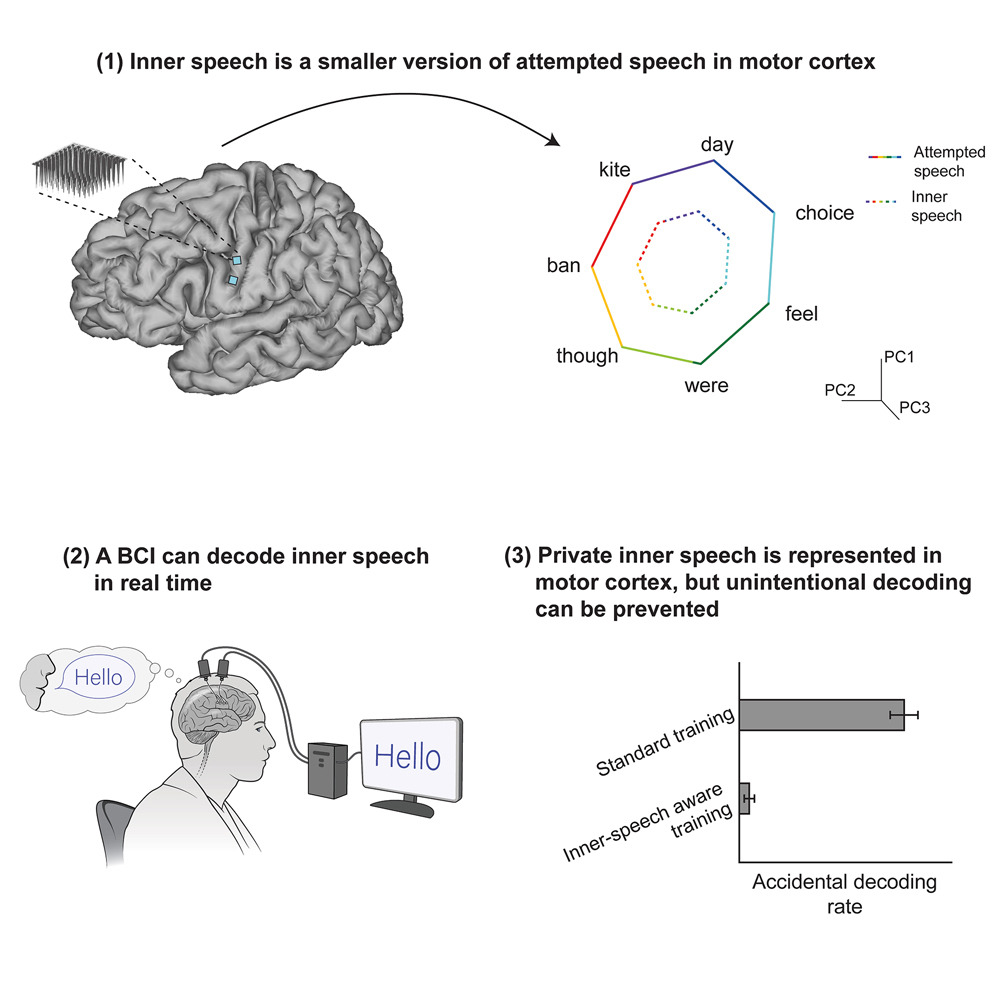

Stanford researchers have advanced brain-computer interface (BCI) technology by decoding inner speech, the silent internal monologue, using implants that record neural signals in four people with severe movement disorders.

Earlier devices required users to make strenuous attempts to speak aloud. This system instead decoded silently imagined words in real time, drawing on a 125,000-word vocabulary, with accuracy rates ranging from 46% to 74%.

The study found that attempted speech and inner speech produce similar patterns of activity in the motor cortex, although the signals from inner speech are weaker. By isolating those signals, the researchers translated imagined sentences into audio, enabling speech without any physical movement.

One participant used a mental "password", the phrase "chitty chitty bang bang", to switch the system on and off, and the system recognized it with nearly 99% accuracy. The approach offers a safeguard against involuntarily decoding private thoughts.

In some tests, the system also picked up inner speech that participants had not intended to share, such as numbers they silently counted while tallying shapes, which raised concerns about cognitive privacy. In response, the team implemented privacy safeguards, including filtering out inner-speech signals and requiring the user to deliberately initiate decoding.

Lead researcher Kunz acknowledged the ethical risks but emphasized that the team's focus remains on helping people who cannot speak. Other experts warned, however, that as these capabilities expand, society moves closer to an era of "brain transparency," in which unspoken thoughts could be exposed.